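I have a new project that stores information from REST responses in an IRIS database. I need to sync information from at least two dozen separate REST endpoints, which means creating nearly that many ObjectScript classes to store the results from those endpoints.

Could I use ChatGPT to create these classes? The answer is "yes", which is great, since this is my first attempt at using generative AI for something useful. Generating pictures of giraffes eating soup was getting a bit old...

Here's what I did: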

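- Used "curl" to query the endpoint and get some sample JSON output from the REST calls.

- Opened ChatGPT (InterSystems provides a company-wide license for employees).

- Had the following conversation with ChatGPT.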

I entered the following request at the "Message ChatGPT" prompt:

Me: Generate an ObjectScript class that will hold data from the following JSON:

I hit "Return" before entering the JSON, so ChatGPT prompted me as follows:

ChatGPT: Please provide the JSON structure you'd like me to generate the ObjectScript class for. If you have an example JSON object, paste it here, and I'll create the corresponding InterSystems ObjectScript class for you.

I entered the following as the prompt:

{
    "id": 335818632,
    "appId": 113,
    "appName": "Batch",
    "contentId": 182455380233,
    "subject": "Test Subject",
    "name": "Test email campaign name",
    "counters": {
        "processed": 3910,
        "deferred": 86,
        "unsubscribed": 17,
        "statuschange": 17,
        "bounce": 153,
        "mta_dropped": 8,
        "dropped": 299,
        "delivered": 3749,
        "sent": 3903,
        "click": 16,
        "open": 323
    },
    "lastProcessingFinishedAt": 1733232499000,
    "lastProcessingStartedAt": 1733232303000,
    "lastProcessingStateChangeAt": 1733232499000,
    "numIncluded": 4201,
    "processingState": "DONE",
    "scheduledAt": 1733232300000,
    "type": "BATCH_EMAIL"
}

ChatGPT's reply:

Here's the InterSystems ObjectScript class that maps to the provided JSON structure:

Class MyNamespace.MyJSONData Extends (%Persistent, %JSON.Adaptor)
{
    Property id As %Integer
    Property appId As %Integer
    Property appName As %String
    Property contentId As %Integer
    Property subject As %String
    Property name As %String
    Property counters As MyNamespace.Counters
    Property lastProcessingFinishedAt As %BigInt
    Property lastProcessingStartedAt As %BigInt
    Property lastProcessingStateChangeAt As %BigInt
    Property numIncluded As %Integer
    Property processingState As %String
    Property scheduledAt As %BigInt
    Property type As %String

    ClassMethod %OnJSONImport(jsonObject As %Object, instance As MyNamespace.MyJSONData) As %Status
    {
        Quit ..%JSONImport(jsonObject, .instance)
    }
}

Class MyNamespace.Counters Extends (%SerialObject, %JSON.Adaptor)
{
    Property processed As %Integer
    Property deferred As %Integer
    Property unsubscribed As %Integer
    Property statuschange As %Integer
    Property bounce As %Integer
    Property mta_dropped As %Integer
    Property dropped As %Integer
    Property delivered As %Integer
    Property sent As %Integer
    Property click As %Integer
    Property open As %Integer
}

Along with an explanation:

[Screenshot: ChatGPT's explanation of the generated classes]

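This code is a good start, but I wanted it in a package named "MyPkg" with the class named "EmailCampaign". Also, by applying a little NI, I knew that the values behind the generated "%BigInt" properties were actually Linux times and that those properties should be of type %Library.PosixTime.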
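A minimal sketch of what that type change implies when a value is converted by hand. Two assumptions that are not stated in the post: the sample values (such as 1733232499000) are epoch milliseconds, judging by their magnitude, and `UnixTimeToLogical()` expects seconds. The class `MyPkg.TimeDemo` and its method names are hypothetical and exist only for illustration.

```objectscript
Class MyPkg.TimeDemo Extends %RegisteredObject
{

/// Convert an epoch value in milliseconds to a %PosixTime logical value.
/// Assumption: the REST API reports milliseconds, so drop the last three digits first.
ClassMethod FromEpochMillis(ms As %Integer) As %Library.PosixTime
{
    // "\" is integer division in ObjectScript
    Quit ##class(%Library.PosixTime).UnixTimeToLogical(ms \ 1000)
}

/// Print the converted value in ODBC timestamp format.
ClassMethod Show(ms As %Integer = 1733232499000)
{
    Set logical = ..FromEpochMillis(ms)
    Write ##class(%Library.PosixTime).LogicalToOdbc(logical), !
}

}
```

From a terminal, `Do ##class(MyPkg.TimeDemo).Show()` prints the timestamp for the sample `lastProcessingFinishedAt` value, which is a quick way to check that the conversion gives a plausible date.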

I made the following request (not sure why I say "please" to a machine; I guess my parents did a good job):

Me: Can we recreate the class again please, renaming the package "MyPkg" and the Class as "EmailCampaign"? Also, please change types of Properties from %BigInt to %Library.PosixTime.

ChatGPT's reply:

Here's the updated ObjectScript class with the specified package name and class name, and the timestamp properties updated to use %Library.PosixTime:

Class MyPkg.EmailCampaign Extends (%Persistent, %JSON.Adaptor)
{
    Property id As %Integer
    Property appId As %Integer
    Property appName As %String
    Property contentId As %Integer
    Property subject As %String
    Property name As %String
    Property counters As MyPkg.Counters
    Property lastProcessingFinishedAt As %Library.PosixTime
    Property lastProcessingStartedAt As %Library.PosixTime
    Property lastProcessingStateChangeAt As %Library.PosixTime
    Property numIncluded As %Integer
    Property processingState As %String
    Property scheduledAt As %Library.PosixTime
    Property type As %String

    ClassMethod %OnJSONImport(jsonObject As %Object, instance As MyPkg.EmailCampaign) As %Status
    {
        Quit ..%JSONImport(jsonObject, .instance)
    }
}

Class MyPkg.Counters Extends (%SerialObject, %JSON.Adaptor)
{
    Property processed As %Integer
    Property deferred As %Integer
    Property unsubscribed As %Integer
    Property statuschange As %Integer
    Property bounce As %Integer
    Property mta_dropped As %Integer
    Property dropped As %Integer
    Property delivered As %Integer
    Property sent As %Integer
    Property click As %Integer
    Property open As %Integer
}

Along with the following explanation:

[Screenshot: ChatGPT's explanation of the updated classes]

After copying the code "as is" into VS Code, syntax problems with the curly braces stood out. ObjectScript classes do not allow inner class definitions.

[Screenshot]

Asking ChatGPT to fix the "curly brace" problem by making the MyPkg.Counters inner class a separate class did not work; a human has to move that class into another file. ChatGPT did rename the class after receiving the following request:

Me: The class as it is written does not compile. Can we move the internal class named MyPkg.Counters out of the main class into its own class and rename it to MyPkg.CampaignCounters?

ChatGPT's reply:

Here's the updated ObjectScript code with the MyPkg.CampaignCounters class moved out as its own class:

Class MyPkg.EmailCampaign Extends (%Persistent, %JSON.Adaptor)
{
    Property id As %Integer
    Property appId As %Integer
    Property appName As %String
    Property contentId As %Integer
    Property subject As %String
    Property name As %String
    Property counters As MyPkg.CampaignCounters
    Property lastProcessingFinishedAt As %Library.PosixTime
    Property lastProcessingStartedAt As %Library.PosixTime
    Property lastProcessingStateChangeAt As %Library.PosixTime
    Property numIncluded As %Integer
    Property processingState As %String
    Property scheduledAt As %Library.PosixTime
    Property type As %String

    ClassMethod %OnJSONImport(jsonObject As %Object, instance As MyPkg.EmailCampaign) As %Status
    {
        Quit ..%JSONImport(jsonObject, .instance)
    }
}

Class MyPkg.CampaignCounters Extends (%SerialObject, %JSON.Adaptor)
{
    Property processed As %Integer
    Property deferred As %Integer
    Property unsubscribed As %Integer
    Property statuschange As %Integer
    Property bounce As %Integer
    Property mta_dropped As %Integer
    Property dropped As %Integer
    Property delivered As %Integer
    Property sent As %Integer
    Property click As %Integer
    Property open As %Integer
}

After pasting the above code into VS Code and compiling it, the compiler reported that no type named "%Object" exists in ObjectScript. According to the IRIS documentation, the type of the first argument to %JSON.Adaptor's %JSONImport() method is flexible:

"The input argument is either JSON as a string or stream, or a subclass of %DynamicAbstractObject."

After removing the "As %Object" type from the first argument of %OnJSONImport() in the first class and separating the two classes into different files, the MyPkg.EmailCampaign class compiled successfully. However, MyPkg.CampaignCounters did not compile; ChatGPT doesn't (yet) know that the underscore is a special character in ObjectScript (the string concatenation operator).

Let's try to teach it.

Me: The underscore character "_" is a special character in ObjectScript. Please change properties with an underscore in their names to camel case names and remove the underscore.

ChatGPT's reply:

Here is the updated code with properties that had underscores in their names converted to camel case:

Class MyPkg.EmailCampaign Extends (%Persistent, %JSON.Adaptor)
{
    Property id As %Integer
    Property appId As %Integer
    Property appName As %String
    Property contentId As %Integer
    Property subject As %String
    Property name As %String
    Property counters As MyPkg.CampaignCounters
    Property lastProcessingFinishedAt As %Library.PosixTime
    Property lastProcessingStartedAt As %Library.PosixTime
    Property lastProcessingStateChangeAt As %Library.PosixTime
    Property numIncluded As %Integer
    Property processingState As %String
    Property scheduledAt As %Library.PosixTime
    Property type As %String

    ClassMethod %OnJSONImport(jsonObject As %Object, instance As MyPkg.EmailCampaign) As %Status
    {
        Quit ..%JSONImport(jsonObject, .instance)
    }
}

Class MyPkg.CampaignCounters Extends (%SerialObject, %JSON.Adaptor)
{
    Property processed As %Integer
    Property deferred As %Integer
    Property unsubscribed As %Integer
    Property statusChange As %Integer
    Property bounce As %Integer
    Property mtaDropped As %Integer
    Property dropped As %Integer
    Property delivered As %Integer
    Property sent As %Integer
    Property click As %Integer
    Property open As %Integer
}

Along with the following explanation:

[Screenshot: ChatGPT's explanation of the camel-case changes]

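ChatGPT went the extra mile and also converted another property whose name is made of two separate words (statuschange) to camel case; I kept that change, since the similarly named properties in the main class are all camel case.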
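One side effect of renaming properties is that their names no longer match the JSON keys ("statuschange" and "mta_dropped"), and %JSON.Adaptor matches on the property name by default. A minimal sketch of one way to handle this, assuming the `%JSONFIELDNAME` property parameter of %JSON.Adaptor is used to map each renamed property back to its original key. This mapping is an addition for illustration and is not part of the ChatGPT output above; the terminating semicolons follow standard class definition syntax.

```objectscript
Class MyPkg.CampaignCounters Extends (%SerialObject, %JSON.Adaptor)
{

Property processed As %Integer;

Property deferred As %Integer;

Property unsubscribed As %Integer;

/// JSON key is all lower case, so map it explicitly.
Property statusChange As %Integer(%JSONFIELDNAME = "statuschange");

Property bounce As %Integer;

/// JSON key contains an underscore, which is not allowed in a property name.
Property mtaDropped As %Integer(%JSONFIELDNAME = "mta_dropped");

Property dropped As %Integer;

Property delivered As %Integer;

Property sent As %Integer;

Property click As %Integer;

Property open As %Integer;

}
```

With this mapping the ObjectScript side keeps the camel-case names while the import and export still use the keys that the REST endpoint sends.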

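With the latest generated code split into two separate files (and the "As %Object" declaration removed from the %OnJSONImport() method definition), all of the code compiles successfully.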
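Once both classes compile, loading a response could look like the sketch below. It assumes the input is passed straight to the instance method `%JSONImport()` on a new object, which accepts a string, a stream, or a dynamic object as quoted from the documentation earlier. The class `MyPkg.ImportDemo` and its method names are hypothetical, and the sample is a trimmed-down version of the JSON shown above that only uses keys matching the property names exactly.

```objectscript
Class MyPkg.ImportDemo Extends %RegisteredObject
{

/// Import one JSON document (string, stream, or dynamic object) and save it.
ClassMethod Load(json) As %Status
{
    Set campaign = ##class(MyPkg.EmailCampaign).%New()
    Set sc = campaign.%JSONImport(json)
    // Stop here if the JSON did not map onto the class
    If $$$ISERR(sc) Quit sc
    Quit campaign.%Save()
}

/// Try the import with a small dynamic object built from the sample data.
ClassMethod Test() As %Status
{
    Set sample = {"id": 335818632, "appName": "Batch", "counters": {"processed": 3910, "sent": 3903}}
    Quit ..Load(sample)
}

}
```

From a terminal, `Set sc = ##class(MyPkg.ImportDemo).Test()` runs the import, and `Do $System.Status.DisplayError(sc)` shows the details if the returned status is an error.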

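These generated classes are a great starting point for what I need, and I will repeat the process for the other REST data sources in this project, which will save me a lot of unnecessary typing.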

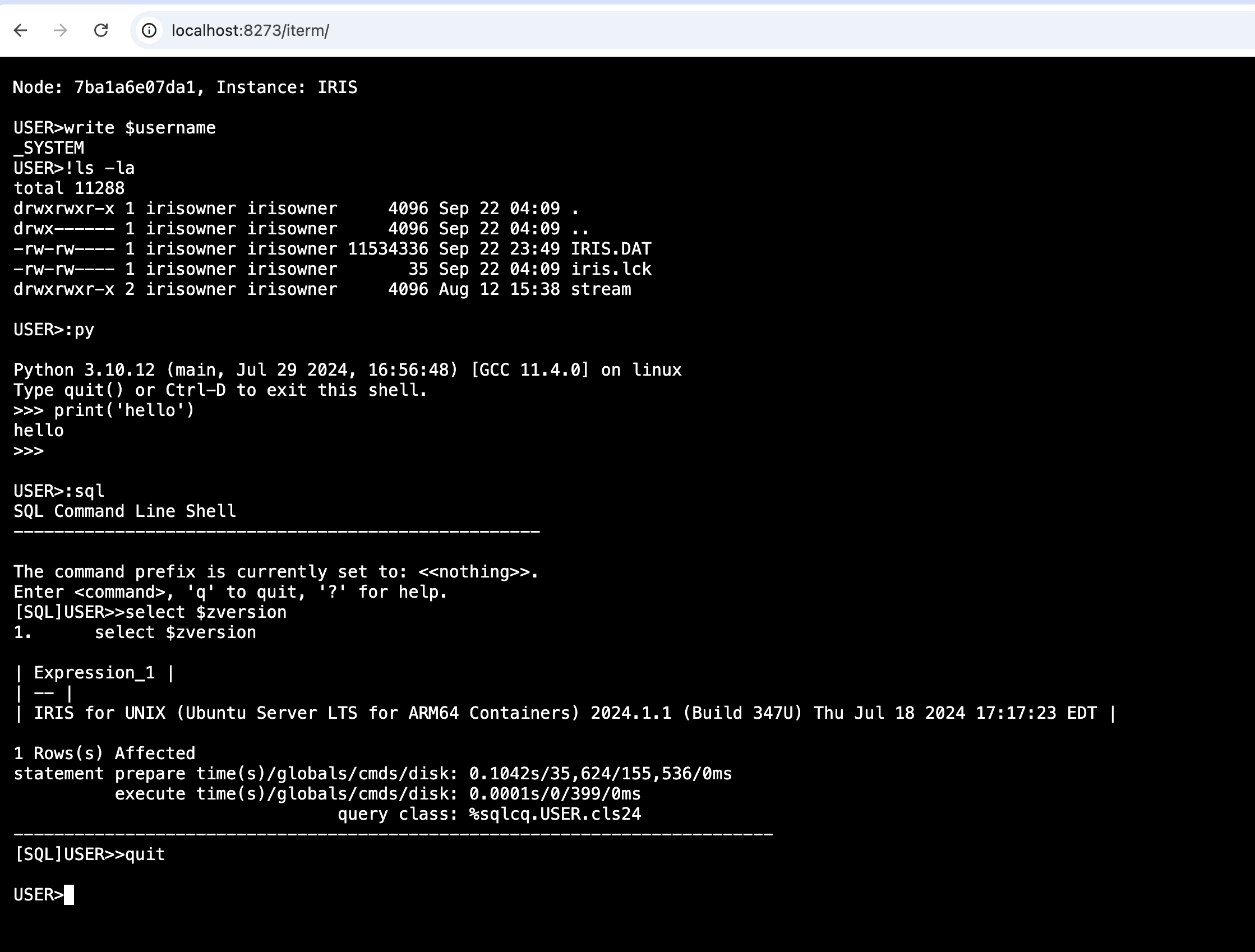

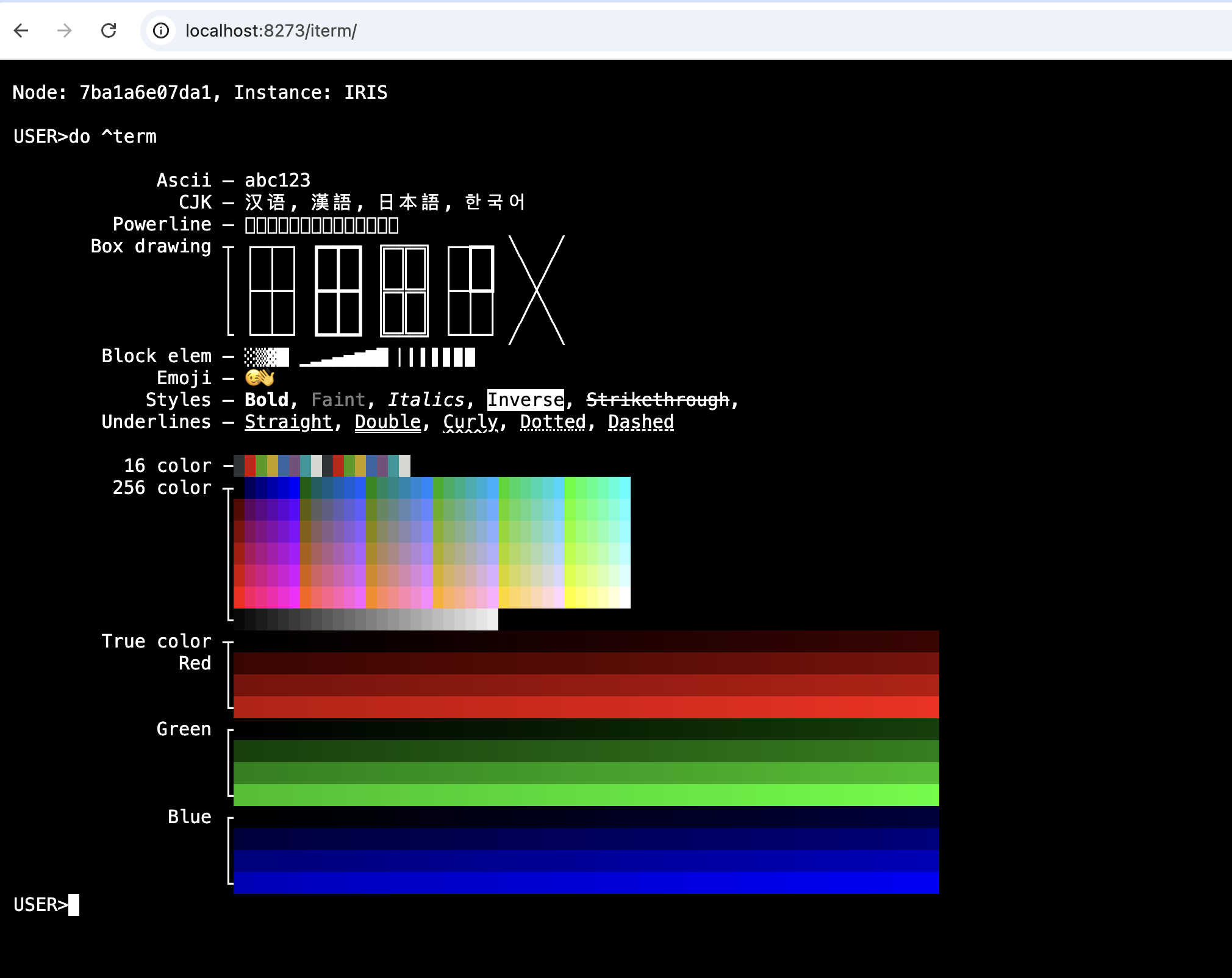
